I've just gone through the pain of downloading each of the files required to extract the Visual Studio 2010 aka Rosario September CTP VPC. Why couldn't they use the Microsoft Download Manager??

Anyway, first things first: I opened Visual Studio 2010 and went to the New Project dialog. The first thing I noticed was that the MVC Framework is missing. I'm not surprised, as it is yet to be released, and I don't doubt that it'll make it into the final release, but it's a bit disappointing all the same as it means I'll have to settle for bad old ASP.NET when I get to the web stuff.

The new options that stand out to me are the WiX project options (nice to see that getting shipped with Visual Studio) and Modelling Projects (which currently just has an empty project). Being curious to see what this is, the Modelling Project is my first port of call.

Choosing to create a new Empty Modelling Project presents me with this:

I get a Model Explorer on the left and in the Solution Explorer I can see that I have been given a ModelDefinition folder. Opening this folder reveals a single file - ModelDefinition.uml. When I right click and choose to Add a File I have a number of different types of model presented to me. These are:

- Activity Diagram

- Component Diagram

- Layer Diagram

- Logical Class Diagram

- Sequence Diagram

- Use Case Diagram

My first thoughts are that it's a useful enough set of diagrams to work from. I'm a little surprised not to see a State Machine diagram available, especially given the obvious synergy that Workflow Foundation might have with this type of model. There's also no sign of support for OCL, but I'm less surprised by this (and certainly not disappointed). I've also never come across a 'Layer Diagram' before, and a quick look at the OMG UML Superstructure specification doesn't seem to turn up any mention of it - though maybe I just can't spot it there. That being the case, the Layer Diagram seems like a logical place to start playing.

The Layer Diagram seems to be about showing the various layers that will make up an application, and the dependencies between those layers. It has 3 patterns available out of the box that you can drag and drop on: 3 layer, 4 layer, and MVC. Seeing the MVC pattern there is nice, but I'm not sure how useful this is. How many applications end up with just these three layers? Hmmm, maybe if you're following the ActiveRecord pattern, but otherwise you're likely to have some form of Repository/DAO layer I would think. So, being a generally DDD guy, the first 'layer' pattern that I've tried to put together follows an Onion/Hexagonal pattern.
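To make the Onion idea a little more concrete, here is a minimal Python sketch of the rule it implies: dependencies should only point inwards, towards the core. This is my own illustration and not something the CTP tooling is known to do; the ring names and the `invalid_dependencies` helper are hypothetical.

```python
# Hypothetical layer names, ordered from the core of the onion outwards.
RINGS = ["Domain", "Application", "Infrastructure", "UI"]


def invalid_dependencies(dependencies):
    """Return the (source, target) pairs where a layer depends on a ring further out."""
    depth = {name: index for index, name in enumerate(RINGS)}
    return [(source, target)
            for source, target in dependencies
            if depth[target] > depth[source]]


# Each pair reads "source depends on target".
deps = [
    ("UI", "Application"),
    ("Application", "Domain"),
    ("Domain", "Infrastructure"),  # the core reaching outwards: not allowed
]
bad = invalid_dependencies(deps)
```

Treating the rings as a simple ordered list is a simplification; a real onion would normally put UI and infrastructure in the same outer ring.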

OK, so why is this better than what I can do with basic shapes in Visio? Well, one thing is that I can specify acceptable (and unacceptable) namespaces for each layer, and drag and drop projects onto a layer from the Solution Explorer, which associates the project with that layer. Now if I use an invalid namespace in a class file, then when the model is validated I get a series of errors telling me about the bad namespace naming. I've got to say that this feature on its own is not exactly compelling. Yes, I like to ensure compliance with conventions across the dev team(s) that I work with, but I wouldn't create this diagram just for that purpose.

Also, I haven't created this in the way that I normally would - I typically follow the examples provided by Kyle Bailey and Jeffrey Palermo. With this I wasn't sure how to represent the utilities that would normally be present. I was also tempted to break some of the layers up and make this more like a project diagram, a temptation I resisted.

Another feature I spotted was the ability to put layers inside of layers, so I could have dropped the MVC layer inside my UI layer. If I did, though, my UI layer would suddenly contain layers that are typically just folders inside a project for me. Maybe I'm just not 'getting it', or I'm just not working on big enough systems (though I'd question the ability to manage the complexity of a system with many more layers that represented collections of projects). I guess that when more features are added to this type of model its real potential for usefulness will become clear. At the moment I'm not really sold on it.
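For anyone wondering what the namespace validation described above amounts to, here is a rough Python sketch of the idea. It is only my guess at the behaviour, not how the CTP implements it; the `Layer` class, the `validate` function, and the `Shop.*` namespaces are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    allowed: list = field(default_factory=list)    # namespace prefixes permitted in this layer
    forbidden: list = field(default_factory=list)  # namespace prefixes rejected in this layer


def _matches(namespace, prefix):
    # "Shop.Domain.Orders" matches the prefix "Shop.Domain", but "Shop.DomainX" does not.
    return namespace == prefix or namespace.startswith(prefix + ".")


def validate(layer, namespaces):
    """Return one error message per namespace that breaks the layer's rules."""
    errors = []
    for ns in namespaces:
        if any(_matches(ns, p) for p in layer.forbidden):
            errors.append(f"{layer.name}: namespace '{ns}' is forbidden")
        elif layer.allowed and not any(_matches(ns, p) for p in layer.allowed):
            errors.append(f"{layer.name}: namespace '{ns}' is not an allowed namespace")
    return errors


# Namespaces found in the class files of the project dropped onto the layer.
domain = Layer("Domain", allowed=["Shop.Domain"], forbidden=["Shop.Web"])
errors = validate(domain, ["Shop.Domain.Orders", "Shop.Web.Controllers", "Shop.Data"])
```

Here `Shop.Web.Controllers` trips the forbidden rule and `Shop.Data` fails because it sits outside the allowed list, which is roughly the sort of error list I got back when validating the model.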

Looking at the XML that is used to represent the diagram behind the scenes makes me worried about the merge conflicts that might emerge should two people ever work on the same artifact at the same time. This is something that I'll definitely check out soon, as it has a real impact on how practical something like this can be when you are working as part of a team.

Next up is a sequence diagram. These are diagrams that I use a lot and typically I use Enterprise Architect to create them. I've tried using Visio and always just end up incredibly frustrated at the lack of even basic support for this type of artifact.

Well, the less than headline news is that this is nowhere near being Enterprise Architect, so Sparx Systems can sleep easy for at least another release of Visual Studio. On dragging a lifeline onto the surface I am able to specify that I am dealing with an actor. I am not able to specify that it is a boundary, however, which is something that I use quite a lot. Support for messaging seems to be OK, with synchronous and asynchronous messages easily added to the diagram. Self messaging is also nicely catered for. Creating objects is handled OK; I prefer it when the arrow points to the box rather than to the lifeline itself, but I'm not that fussy. I can't see that it is possible to denote deletion from another object, but I guess that given garbage collection in managed applications this doesn't ever really happen as such. I also can't see that it's possible to add constraints (OCL or otherwise). Frames seem to be supported through the 'Interaction Use', which I am assuming is an interaction frame.

Currently guard clauses don't seem to be possible, which is a bit of a miss. This is probably because the only operator currently available for the interaction frame is 'ref', and I'd need at least support for 'alt', 'loop', 'opt', and 'par' before I could make much practical use of the sequence diagram facility. Still, there's at least a year to go before release so I hope that they do expand this feature. It would also be nice to be able to drag a class onto the designer and then use it. With Enterprise Architect I can reverse my code base into a model and then select from classes and their available methods when I am putting the model together. I don't expect this feature to be comparable to Enterprise Architect when it releases, but if it is to be more than a gimmick there is a very long way to go. The good news for Microsoft (I think) is that I'm already more inclined to use this CTP than the UML features of Visio 2007 (which are just bloody awful). They may not be as feature rich yet, but they already feel more right.

Another post to follow as I continue to explore this CTP.

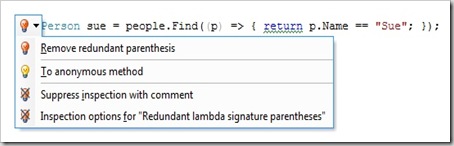

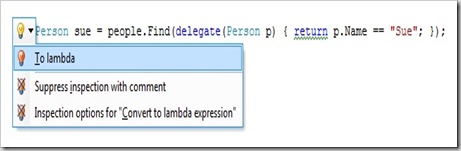

Note that it is offering to convert my code back to a lambda
